
Database Replication

Database replication is the process of copying data from one database to one or more other databases and keeping the copies consistent and up to date. This is done to improve performance, increase availability, and provide redundancy in case of failures. It is commonly used in distributed systems and high-availability environments.

Written by Perlego with AI-assistance


9 Key excerpts on "Database Replication"


- John Garmany, Jeff Walker, Terry Clark (Authors)

- 2005 (Publication Date)

- Auerbach Publications (Publisher)

8 DESIGNING REPLICATED DATABASES

Database Replication is the copying of part or all of a database to one or more remote sites. The remote site can be in another part of the world or right next to the primary site. Because of the speed and reliability of the Internet, many people believe that Database Replication is no longer needed. While it is true that distributed transactions provide access to real-time data almost anywhere in the world, they require that all databases operate all the time. When one database is down for any reason or cannot be contacted, other databases can no longer access its data. Replication solves this problem because each site has a copy of the data.

When a query accesses data on multiple databases, it is called a distributed query. Applications using distributed queries are difficult to optimize because each brand of database (in fact, each version of the same brand) handles the execution of the query differently. Sometimes, the local database will retrieve data from the remote database and process it locally. This can result in high network bandwidth use and slow query performance. More modern databases will analyze the query and send a request to the remote database for only the information it needs, greatly reducing network traffic but also making performance tuning very complicated. Either way, distributed queries always incur some network latency. If this response delay becomes unacceptable, replicating the databases may be the solution. Querying local data is always faster than accessing remote data.

Replication of all or part of a database is becoming increasingly common as companies consolidate servers and information. Many companies use some form of replication to load data into the company data warehouse. It can also be used to create a separate database for reporting, thereby removing the impact of data aggregation from the main database.
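The two strategies for executing a distributed query described above can be compared in a small Python sketch. The remote table, its contents, and the function names are hypothetical, and the network is simulated by counting how many rows each strategy ships from the remote site; real query optimizers are far more complex.

```python
from typing import Callable, Dict, List, Tuple

Row = Dict[str, object]

# Hypothetical remote table: 1,000 orders, one in ten tagged region "EU".
REMOTE_ORDERS: List[Row] = [
    {"id": i, "region": "EU" if i % 10 == 0 else "US", "total": i * 2}
    for i in range(1000)
]


def ship_all_then_filter(pred: Callable[[Row], bool]) -> Tuple[List[Row], int]:
    """Older strategy: pull every remote row across the network, then filter locally."""
    shipped = list(REMOTE_ORDERS)          # every row crosses the network
    result = [r for r in shipped if pred(r)]
    return result, len(shipped)


def push_down_filter(pred: Callable[[Row], bool]) -> Tuple[List[Row], int]:
    """Newer strategy: the remote side evaluates the predicate and ships only matches."""
    shipped = [r for r in REMOTE_ORDERS if pred(r)]  # filtering happens remotely
    return shipped, len(shipped)


def is_eu(row: Row) -> bool:
    return row["region"] == "EU"


rows_a, cost_a = ship_all_then_filter(is_eu)
rows_b, cost_b = push_down_filter(is_eu)
assert rows_a == rows_b                    # same answer either way
print(f"rows shipped: naive={cost_a}, pushdown={cost_b}")
```

Both strategies return the same rows, but pushing the filter to the remote database ships far fewer of them. Either way, each query still pays at least one network round trip, which is the latency a local replica avoids.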
Many companies use replication to create a subset of data that is accessed by another application, a Web site for example. By replicating only a subset of the data, or using non-updateable replication, they can protect sensitive information while allowing users to have access to up-to-date data.
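Subset and non-updatable replication can be illustrated with a minimal Python sketch, assuming a hypothetical `CUSTOMERS` table in which `ssn` stands in for sensitive data. Only rows flagged public and only the listed columns are copied. Each replicated row is wrapped in `types.MappingProxyType`, a standard-library read-only view, so the copy cannot be edited through it. This illustrates the idea and is not any particular product's replication feature.

```python
from types import MappingProxyType
from typing import Dict, Iterable, List, Mapping

# Hypothetical source table; "ssn" stands in for a sensitive column.
CUSTOMERS: List[Dict[str, object]] = [
    {"id": 1, "name": "Ada", "ssn": "111-11-1111", "public": True},
    {"id": 2, "name": "Bob", "ssn": "222-22-2222", "public": False},
    {"id": 3, "name": "Cy", "ssn": "333-33-3333", "public": True},
]


def build_subset_replica(rows: Iterable[Dict[str, object]],
                         columns: List[str]) -> List[Mapping[str, object]]:
    """Copy only public rows and only the listed columns; each copied row is read-only."""
    replica: List[Mapping[str, object]] = []
    for row in rows:
        if row["public"]:                                  # horizontal subset: row filter
            projected = {c: row[c] for c in columns}       # vertical subset: column projection
            replica.append(MappingProxyType(projected))    # non-updatable view of the row
    return replica


web_copy = build_subset_replica(CUSTOMERS, ["id", "name"])
print([dict(r) for r in web_copy])

try:
    web_copy[0]["name"] = "Mallory"    # writes through the replica are rejected
except TypeError as exc:
    print("rejected:", exc)
```

Re-running `build_subset_replica` after the source changes refreshes the copy, so readers see current data without ever holding the sensitive column.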

- Philip A. Bernstein, Eric Newcomer (Authors)

- 2009 (Publication Date)

- Morgan Kaufmann (Publisher)

Chapter 9 Replication

9.1 Introduction

Replication is the technique of using multiple copies of a server or a resource for better availability and performance. Each copy is called a replica.

The main goal of replication is to improve availability, since a service is available even if some of its replicas are not. This helps mission-critical services, such as many financial systems or reservation systems, where even a short outage can be very disruptive and expensive. It helps when communication is not always available, such as a laptop computer that contains a database replica and is connected to the network only intermittently. It is also useful for making a cluster of unreliable servers into a highly available system, by replicating data on multiple servers.

Replication can also be used to improve performance by creating copies of databases, such as data warehouses, which are snapshots of TP databases that are used for decision support. Queries on the replicas can be processed without interfering with updates to the primary database server. If applied to the primary server, such queries would degrade performance, as discussed in Section 6.6, Query-Update Problems in two-phase locking.

In each of these cases, replication can also improve response time. The overall capacity of a set of replicated servers can be greater than the capacity of a single server. Moreover, replicas can be distributed over a wide area network, ensuring that some replica is near each user, thereby reducing communications delay.

9.2 Replicated Servers

The Primary-Backup Model

To maximize a server's availability, we should try to maximize its mean time between failures (MTBF) and minimize its mean time to repair (MTTR). After doing the best we can at this, we can still expect periods of unavailability. To improve availability further requires that we introduce some redundant processing capability by configuring each server as two server processes: a primary server that is doing the real work, and a backup server that is standing by, ready to take over immediately after the primary fails (see Figure 9.1).
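The excerpt's advice about MTBF and MTTR can be made concrete with the widely used steady-state formula availability = MTBF / (MTBF + MTTR). The Python sketch below also estimates the gain from a primary-backup pair. That estimate rests on two optimistic assumptions, stated in the code: the servers fail independently, and takeover by the backup is instantaneous and always succeeds. The MTBF and MTTR figures are made up for illustration.

```python
def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability of one server: the fraction of time it is up."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


def primary_backup_availability(a: float) -> float:
    """Availability of a primary plus one standby backup.

    Assumes the two servers fail independently and that takeover is
    instantaneous and always succeeds, so the service is down only while
    both servers are down at once. Real failover adds its own downtime.
    """
    return 1.0 - (1.0 - a) ** 2


a = availability(mtbf_hours=999.0, mttr_hours=1.0)   # hypothetical figures
pair = primary_backup_availability(a)
print(f"single: {a:.6f}, primary-backup: {pair:.6f}")
```

Under these assumptions, adding one backup turns roughly nine hours of downtime per year (0.1% of about 8,760 hours) into well under a minute. Real failover takes time and can itself fail, so actual gains are smaller.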

Fundamentals of Grid Computing

Theory, Algorithms and Technologies

- Frederic Magoules (Author)

- 2009 (Publication Date)

- Chapman and Hall/CRC (Publisher)

Data replication in grid environments

3.2.1 Replication in databases

Although replication is not a central emphasis of the database community, interest in dynamic data replication in distributed database management systems continues to grow due to the need to improve scalability, data availability, and fault tolerance. There are many similarities between the replication techniques developed by the database community and those developed by the distributed systems community. In [Wiesmann et al., 2000a], a model is provided that allows us to compare and distinguish replication solutions developed from these two perspectives. A key difference between replication solutions in these communities is the nature of the replication protocols used [Wiesmann et al., 2000a], [Wiesmann et al., 2000b]. Since the probability of failures in distributed systems is usually higher than in database systems (e.g., the various hardware and software resources involved might crash), replication in the distributed systems community puts emphasis on fault-tolerant systems, i.e., keeping the systems running in case of these failures or data unavailability. In contrast, the main focus of replication in database systems is to maintain consistency of replicated data and ensure data safety for better application performance. Replication techniques for database systems depend on the update frequency and user access/update patterns on data stored in these databases. If there are more updates in the database, keeping the replicas consistent with each other becomes more costly. In this case, a replication strategy that strictly controls the number of newly created replicas is preferable. Otherwise, wide replication is more profitable, as access to data held locally is faster than remote access through a wide area network. The simplest replication protocol in database systems is the read-one-write-all (ROWA) protocol, which provides an optimistic degree of data consistency.
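The read-one-write-all idea can be sketched in a few lines of Python. The `Site` and `ROWA` classes are hypothetical. The sketch checks that every site is up before writing and ignores failures during a write, which a real system would handle with an atomic commit protocol such as two-phase commit.

```python
from typing import Dict, List, Optional


class Site:
    """One database site holding a full copy of the replicated data."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.up = True
        self.data: Dict[str, int] = {}


class ROWA:
    """Read-one-write-all: a read uses any one available copy;
    a write must reach every copy or it is refused."""

    def __init__(self, sites: List[Site]) -> None:
        self.sites = sites

    def read(self, key: str) -> Optional[int]:
        for site in self.sites:
            if site.up:                       # any single live copy suffices
                return site.data.get(key)
        raise RuntimeError("no site available for read")

    def write(self, key: str, value: int) -> None:
        if not all(s.up for s in self.sites):  # one down site blocks every write
            raise RuntimeError("write refused: not all copies reachable")
        for site in self.sites:
            site.data[key] = value


sites = [Site("A"), Site("B"), Site("C")]
db = ROWA(sites)
db.write("x", 1)            # reaches all three copies
sites[0].up = False         # site A crashes
print(db.read("x"))         # still served, now from site B
try:
    db.write("x", 2)        # cannot reach A, so the write is refused
    write_ok = True
except RuntimeError as err:
    write_ok = False
    print(err)
```

The sketch shows the cost the excerpt describes. Reads need only one live copy, but every write must touch all n copies. A single unreachable site therefore blocks updates, and heavier update traffic makes wide replication more expensive.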
- Brian Knight, Ketan Patel, Wayne Snyder, Ross LoForte, Steven Wort (Authors)

- 2011 (Publication Date)

- Wrox (Publisher)

Chapter 16 Replication

Today's enterprise needs to distribute its data across many departments and geographically dispersed offices. SQL Server replication provides ways to distribute data and database objects among its SQL Server databases, databases from other vendors such as Oracle, and mobile devices such as Pocket PC and point-of-sale terminals. Along with log shipping, database mirroring, and clustering, replication provides functionality that satisfies customers' needs for load balancing, high availability, and scaling.

This chapter introduces you to the concept of replication, explaining how to implement basic snapshot replication, and noting things to pay attention to when setting up transactional and merge replication.

Replication Overview

SQL Server replication closely resembles the magazine publishing industry, so we'll use that analogy to explain its overall architecture. Consider National Geographic. The starting point is the large pool of journalists writing articles. From all the available articles, the editor picks which ones will be included in the current month's magazine. The selected set of articles is then published in a publication. Once a monthly publication is printed, it is shipped out via various distribution channels to subscribers all over the world.

In SQL Server replication, similar terminology is used. The pool from which a publication is formed can be considered a database. Each piece selected for publication is an article; it can be a table, a stored procedure, or another database object. Like a magazine publisher, replication also needs a distributor
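The publishing analogy can be modeled with plain Python objects to show how the roles relate. This is not SQL Server's replication API, which has its own configuration tools. The class and field names below are hypothetical and simply mirror the excerpt's terms. Only snapshot-style delivery is modeled: the distributor copies every article of a publication in full and replaces each subscriber's copy.

```python
import copy
from dataclasses import dataclass, field
from typing import Dict, List

Table = List[Dict[str, object]]


@dataclass
class Publication:
    """A named set of articles (here, table names) chosen from the publisher database."""
    name: str
    articles: List[str]


@dataclass
class Subscriber:
    """A receiving database that holds its own copy of each subscribed article."""
    name: str
    tables: Dict[str, Table] = field(default_factory=dict)


@dataclass
class Distributor:
    """Stores the latest snapshot of each publication and forwards it to subscribers."""
    snapshots: Dict[str, Dict[str, Table]] = field(default_factory=dict)
    subscriptions: Dict[str, List[Subscriber]] = field(default_factory=dict)

    def subscribe(self, pub: Publication, sub: Subscriber) -> None:
        self.subscriptions.setdefault(pub.name, []).append(sub)

    def take_snapshot(self, pub: Publication, publisher_db: Dict[str, Table]) -> None:
        # Snapshot replication: copy every article of the publication in full.
        self.snapshots[pub.name] = {
            article: copy.deepcopy(publisher_db[article]) for article in pub.articles
        }

    def distribute(self, pub: Publication) -> None:
        # Each subscriber's copy of an article is replaced, not merged.
        for sub in self.subscriptions.get(pub.name, []):
            for article, rows in self.snapshots[pub.name].items():
                sub.tables[article] = copy.deepcopy(rows)


# Hypothetical publisher database with one table that should stay private.
publisher_db: Dict[str, Table] = {
    "products": [{"id": 1, "name": "tea"}],
    "salaries": [{"id": 1, "amount": 10}],
}
catalog = Publication("catalog", articles=["products"])
distributor = Distributor()
store = Subscriber("pos_terminal")
distributor.subscribe(catalog, store)
distributor.take_snapshot(catalog, publisher_db)
distributor.distribute(catalog)
print(store.tables)
```

Because `salaries` is never added to the publication, it never leaves the publisher. Transactional and merge replication, which the chapter also covers, would propagate incremental changes rather than whole snapshots.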

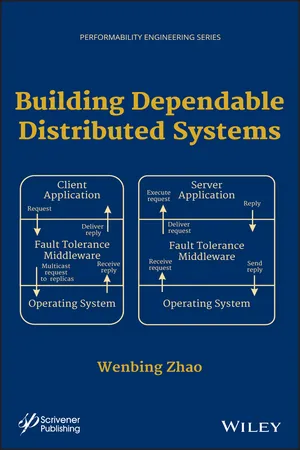

- Wenbing Zhao (Author)

- 2014 (Publication Date)

- Wiley-Scrivener (Publisher)

Chapter 4

Data and Service Replication

Unlike checkpointing/logging and recovery-oriented computing, which focus on the recovery of an application should a fault occur, the replication technique offers another way of achieving high availability of a distributed service by masking various hardware and software faults. The goal of the replication technique is to extend the mean time to failure of a distributed service. As the name suggests, the replication technique resorts to the use of space redundancy, i.e., instead of running a single copy of the service, multiple copies are deployed across a group of physical nodes for fault isolation. For replication to work (i.e., to be able to mask individual faults), it is important to ensure that faults occur independently at different replicas.

The most well-known approach to service replication is state-machine replication [26]. In this approach, each replica is modeled as a state machine that consists of a set of state variables and a set of interfaces accessible by the clients that operate on the state variables deterministically. With the presence of multiple copies of the state machine, the issue of consistency among the replicas becomes important. It is apparent that access to the replicas must be coordinated by a replication algorithm so that they remain consistent at the end of each operation. More specifically, a replication algorithm must ensure that a client's request (which invokes one of the interfaces defined by the state machine) reaches all non-faulty replicas, and all non-faulty replicas must deliver the requests (which potentially come from different clients) in exactly the same total order. It is important that the execution of a client's request is deterministic, i.e., given the same request, the same response will be generated at all non-faulty replicas. If an application contains nondeterministic behavior, it must be rendered deterministic by controlling such behavior, e.g.
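State-machine replication can be sketched in Python under simplifying assumptions. A single, non-faulty `Sequencer` object stands in for the replication algorithm that establishes a total order, and every replica is always reachable. The class names and the `("add"/"set", key, amount)` command format are hypothetical. Because `set` and `add` do not commute, replicas would diverge if they applied the same commands in different orders; delivering them through one ordered log keeps every replica identical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Command = Tuple[str, str, int]   # (op, key, amount), e.g. ("add", "x", 5)


@dataclass
class Replica:
    """A deterministic state machine: same commands in the same order give the same state."""
    state: Dict[str, int] = field(default_factory=dict)

    def apply(self, cmd: Command) -> int:
        op, key, amount = cmd
        if op == "add":
            self.state[key] = self.state.get(key, 0) + amount
        elif op == "set":
            self.state[key] = amount
        else:
            raise ValueError(f"unknown op {op!r}")
        return self.state[key]       # response depends only on prior state and cmd


class Sequencer:
    """Assigns one total order to requests from all clients and delivers each
    request to every replica in that order. A single sequencer is a
    simplification; a real system needs a fault-tolerant ordering protocol."""

    def __init__(self, replicas: List[Replica]) -> None:
        self.replicas = replicas
        self.log: List[Command] = []

    def submit(self, cmd: Command) -> List[int]:
        self.log.append(cmd)                           # log position = total order
        return [r.apply(cmd) for r in self.replicas]   # same cmd, same order, everywhere


replicas = [Replica(), Replica(), Replica()]
seq = Sequencer(replicas)
seq.submit(("set", "x", 10))              # from client 1
seq.submit(("add", "x", 5))               # from client 2
responses = seq.submit(("add", "x", -3))  # from client 1
print(responses)
```

If `apply` read the clock or a random number, replicas given identical logs could still diverge, which is why the excerpt requires nondeterministic behavior to be controlled.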

- Wenbing Zhao (Author)

- 2021 (Publication Date)

- Wiley-Scrivener (Publisher)

4 Data and Service Replication

Unlike checkpointing/logging and recovery-oriented computing, which focus on the recovery of an application should a fault occur, the replication technique offers another way of achieving high availability of a distributed service by masking various hardware and software faults. The goal of the replication technique is to extend the mean time to failure of a distributed service. As the name suggests, the replication technique resorts to the use of space redundancy, i.e., instead of running a single copy of the service, multiple copies are deployed across a group of physical nodes for fault isolation. For replication to work (i.e., to be able to mask individual faults), it is important to ensure that faults occur independently at different replicas.

The most well-known approach to service replication is state-machine replication [26]. In this approach, each replica is modeled as a state machine that consists of a set of state variables and a set of interfaces accessible by the clients that operate on the state variables deterministically. With the presence of multiple copies of the state machine, the issue of consistency among the replicas becomes important. It is apparent that access to the replicas must be coordinated by a replication algorithm so that they remain consistent at the end of each operation. More specifically, a replication algorithm must ensure that a client's request (which invokes one of the interfaces defined by the state machine) reaches all non-faulty replicas, and all non-faulty replicas must deliver the requests (which potentially come from different clients) in exactly the same total order. It is important that the execution of a client's request is deterministic, i.e., given the same request, the same response will be generated at all non-faulty replicas. If an application contains nondeterministic behavior, it must be rendered deterministic by controlling such behavior, e.g.,

- Andrzej Marian Goscinski, Horace Ho Shing Ip, Wei-jia Jia (Authors)

- 2000 (Publication Date)

- World Scientific (Publisher)

In comparison to other protocols, TDGS requires lower communication cost for an operation while providing higher data availability.

1 Introduction

Distributed database (DDB) system technology is one of the major recent developments in the database area, which is moving away from centralization, which resulted in monolithic gigantic databases, towards more decentralization and autonomy of processing [11]. With advances in distributed processing and distributed computing that occurred in the operating systems arena, the database research community did considerable work to address the issues of data distribution, distributed query processing, distributed transaction management, etc. One of the major issues in data distribution is replicated data management. Typical replicated data management parameters are data availability and communication costs: the higher the data availability and the lower the communication costs, the better. Replication is a useful technique for distributed database systems where a data item will be accessed (i.e., read and written) from multiple locations that may be geographically distributed worldwide or in a local area network environment. For example, financial instruments' prices will be read and updated from all over the world [18]. Students' results will be read and updated by lecturers throughout departments. By storing multiple copies of data at several sites in the system, data availability and accessibility to users increase despite site and communication failures. It is an important mechanism because it enables organizations to provide users with access to current data where and when they need it. However, expensive synchronization mechanisms are needed to maintain the consistency and integrity of data [1].
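The trade-off between data availability and communication cost can be made concrete with a short calculation. TDGS itself is not described in this excerpt, so the Python sketch below compares two simpler, well-known protocols instead: read-one-write-all (ROWA) and majority quorum consensus. It assumes n copies, each available independently with probability p. Communication cost is counted as the number of copies an operation must contact. Version numbers, which quorum protocols need to find the latest value, are omitted.

```python
from math import comb
from typing import Dict


def at_least(n: int, k: int, p: float) -> float:
    """Probability that at least k of n independent sites, each up with probability p, are up."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def rowa(n: int, p: float) -> Dict[str, float]:
    """Read-one-write-all: read any one copy, write every copy."""
    return {
        "read_cost": 1,
        "write_cost": n,
        "read_avail": at_least(n, 1, p),
        "write_avail": at_least(n, n, p),
    }


def majority(n: int, p: float) -> Dict[str, float]:
    """Majority quorums: reads and writes each contact a majority, so any two overlap."""
    q = n // 2 + 1
    return {
        "read_cost": q,
        "write_cost": q,
        "read_avail": at_least(n, q, p),
        "write_avail": at_least(n, q, p),
    }


for name, protocol in (("ROWA", rowa), ("majority", majority)):
    r = protocol(5, 0.9)
    print(f"{name:8} read cost={r['read_cost']} write cost={r['write_cost']} "
          f"read avail={r['read_avail']:.4f} write avail={r['write_avail']:.4f}")
```

With five copies each up 90% of the time, a ROWA read contacts one copy and almost always succeeds, but a write succeeds only about 59% of the time. Majority quorums contact three copies per operation but keep both reads and writes above 99% available. Protocols such as the one the excerpt evaluates aim to improve on this trade-off.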

Handbook of Data Management

1999 Edition

- Sanjiv Purba (Author)

- 2019 (Publication Date)

- Auerbach Publications (Publisher)

It is also possible to address the concurrency control issues within the application design itself by designing the application to avoid conflicts or by compensating for conflicts when they occur. Conflict avoidance ensures that all transactions are unique: updates originate from only one site at a time. Schemas can be fragmented in such a manner as to ensure conflict avoidance. In addition, each site can be assigned a slice of time for delivering updates, thereby avoiding conflicts by establishing a business practice.

Special Considerations for Disaster Recovery

One of the most common uses of replication is to replicate transactions to a backup system for the purpose of disaster recovery. However, users are advised to pay close attention to several issues relating to the use of replication for disaster recovery.

In a high-volume transaction environment, many transactions that have occurred at the primary system may be lost in flight and will need to be reentered into the backup system. When the primary fails, how will the lost transactions be identified so that they can be reentered? How will the users be switched over to the backup? The backup system will have a different network address, requiring users to manually log in to the backup and restart their applications.

Is there a documented process for users and administrators to follow in the event of a disaster? Once the primary is recovered, is there a clean mechanism for switching users back to the primary? In the best-case scenario, it should be possible to switch the roles of the systems, making the backup the new primary and making the primary the new backup once it is recovered. This practice eliminates the need to switch all users back to the original configuration.

Special Consideration for Network Failures

Although the availability of data is enhanced through replication because there is a local copy of data, users must understand the implications of extended network failures. Network failures can cause replicate databases to quickly drift apart because local updates are not being propagated. If the application is sensitive to the sequence of transactions originating at various replicate sites, then extended network failures may cause local processing to shut down, nullifying any perceived benefit to data availability through replication.

- Patrizio Pelliccione, Henry Muccini, Alexander Romanovsky (Authors)

- 2007 (Publication Date)

- World Scientific (Publisher)

Firstly, it requires access to source code. This means that only the database vendor will be able to implement it. Secondly, it is typically tightly integrated with the implementation of the regular database functionality, in order to take advantage of the many optimizations performed within the database kernel. While this might lead to better performance, it results in the creation of inter-dependencies between the replication code and the other database modules, which are hard to maintain in a continuously evolving codebase. Recent research proposals have looked at replication solutions outside the database, typically as a middleware layer. However, almost none of them is truly a black-box approach in which the database is used exclusively through its user interface, since this would lead to very simplistic and inefficient replication mechanisms. Instead, some solutions require specific information from the application. For instance, they parse incoming SQL statements in order to determine the tables accessed by an operation. This allows performing simple concurrency control at the middleware layer. More stringently, some solutions require the first command within a transaction to indicate whether the transaction is read-only or an update transaction [13], or to indicate the tables that are going to be accessed by the transaction [8, 9, 15]. Nevertheless, many do not require any additional functionality from the database system itself. However, this can lead to inefficiencies. For instance, it requires update operations or even entire update transactions to be executed at all replicas, which results in limited scalability. We term this approach symmetric processing. An alternative is asymmetric processing of updates, which consists of executing an update transaction at any of the replicas and then propagating and applying only the updated tuples at the remaining replicas. Reference 16 shows that the asymmetric approach …
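The difference between symmetric and asymmetric processing can be sketched in Python. Each replica is treated as a dictionary and each key/value pair as a tuple. The helper names and the `add_interest` transaction are hypothetical, and the sketch ignores concurrency control and deleted rows. Symmetric processing runs the full transaction logic at every replica. Asymmetric processing runs it once, captures the write set (the updated tuples), and applies only that write set elsewhere.

```python
from typing import Callable, Dict, List

State = Dict[str, int]
Transaction = Callable[[State], None]


def run_transaction(db: State, txn: Transaction) -> State:
    """Execute txn on a private copy of db, install the result, and return the
    write set: the keys whose values changed, with their new values.
    (Deleted keys are not tracked in this simplified sketch.)"""
    before = dict(db)
    working = dict(db)
    txn(working)
    write_set = {k: v for k, v in working.items() if k not in before or before[k] != v}
    db.update(write_set)
    return write_set


def symmetric(replicas: List[State], txn: Transaction) -> int:
    """Execute the full transaction at every replica; return how many executions ran."""
    for db in replicas:
        run_transaction(db, txn)
    return len(replicas)


def asymmetric(replicas: List[State], txn: Transaction) -> int:
    """Execute once at one replica, then apply only the updated tuples elsewhere."""
    write_set = run_transaction(replicas[0], txn)
    for db in replicas[1:]:
        db.update(write_set)          # no re-execution of the transaction logic
    return 1


def add_interest(db: State) -> None:
    # Stand-in for costly logic: scans every row but updates only a few.
    for key, balance in list(db.items()):
        if balance > 1000:
            db[key] = balance + balance // 100


start = {"a": 500, "b": 2000, "c": 5000}
sym = [dict(start) for _ in range(3)]
asym = [dict(start) for _ in range(3)]
n_sym = symmetric(sym, add_interest)
n_asym = asymmetric(asym, add_interest)
print(n_sym, n_asym, sym == asym)
```

Both approaches end in identical replica states, but the asymmetric one executes the transaction logic once rather than once per replica, which is the scalability advantage the excerpt points to.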

Index pages curate the most relevant extracts from our library of academic textbooks. They’ve been created using an in-house natural language model (NLM), each adding context and meaning to key research topics.