Threading In Computer Science

Threading is a technique used in computer science to allow multiple tasks to be executed concurrently within a single process. It involves creating multiple threads of execution within a program, each of which can run independently and perform different tasks simultaneously. Threading is commonly used in applications that require high performance and responsiveness, such as video games and web servers.

Written by Perlego with AI-assistance

10 Key excerpts on "Threading In Computer Science"

- eBook - PDF

Fundamentals of Python

First Programs

- Kenneth Lambert(Author)

- 2018(Publication Date)

- Cengage Learning EMEA(Publisher)

Client and server threads can run concurrently on a single computer or can be distributed across several computers that are linked in a network. The technique of using multiple threads in a program is known as multithreading. This chapter offers an introduction to multithreading, networks, and client/server programming. We provide just enough material to get you started with these topics; more complete surveys are available in advanced computer science courses.

Threads and Processes

You are well aware that an algorithm describes a computational process that runs to completion. You are also aware that a process consumes resources, such as CPU (central processing unit) cycles and memory. Until now, we have associated an algorithm or a program with a single process, and we have assumed that this process runs on a single computer. However, your program’s process is not the only one that runs on your computer, and a single program could describe several processes that could run concurrently on your computer or on several networked computers. The following historical summary shows how this is the case.

Time-sharing operating systems: In the late 1950s and early 1960s, computer scientists developed the first time-sharing operating systems. These systems allow several programs to run concurrently on a single computer. Instead of giving their programs to a human scheduler to run one after the other on a single machine, users log in to the computer via remote terminals. They then run their programs and have the illusion, if the system performs well, of having sole possession of the machine’s resources (CPU, disk drives, printer, etc.). Behind the scenes, the operating system creates separate processes for these programs. The system gives each process a turn at the CPU and other resources, and it performs all the work of scheduling.

- eBook - ePub

Parallel Programming with C# and .NET Core

Developing Multithreaded Applications Using C# and .NET Core 3.1 from Scratch

- Rishabh Verma, Rishabh Verma, Neha Shrivastava, Ravindra Akella(Authors)

- 2020(Publication Date)

- BPB Publications(Publisher)

Universal Windows Platform (UWP) or Xamarin, and so on, have to deal with high CPU consuming operations or operations that may take too long to complete. While the user waits for the operation to complete, the application UI should remain responsive to the user actions. You may have seen that dreadful "Not responding" status on one of the applications. This is a classic case of the Main UI thread getting blocked. Proper use of threading can offload the main UI thread and keep the application UI responsive.

- Handling concurrent requests in server: When we develop a web application or Web API hosted on one or many servers, they may receive a large number of requests from different client applications concurrently. These applications are supposed to cater to these requests and respond in a timely fashion. If we use the ASP.NET/ASP.NET Core framework, this requirement is handled automatically, but threading is how the underlying framework achieves it.

- Leverage the full power of the multi-core hardware: With the modern machines powered with multi-core CPUs, effective threading provides a means to leverage the powerful hardware capability optimally.

- Improving performance by proactive computing: Many times, the algorithm or program that we write requires a lot of calculated values. In all such cases, it's best to calculate these values before they are needed, by computing them in parallel. One of the great examples of this scenario is 3D animation for a gaming application.
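The "proactive computing" idea in the list above can be sketched briefly. The excerpt is about C# and .NET; the sketch below uses Python's standard `concurrent.futures` module purely for illustration, and `expensive_value` is a hypothetical stand-in for whatever slow calculation an application needs. The values are requested early, the main thread carries on with other work, and the results are collected only when they are needed.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def expensive_value(n: int) -> int:
    """Hypothetical slow calculation (illustrative name, not from the excerpt)."""
    time.sleep(0.05)  # simulate a wait, such as I/O, during which other threads run
    return n * n


with ThreadPoolExecutor(max_workers=4) as pool:
    # Start every calculation before its result is needed.
    futures = {n: pool.submit(expensive_value, n) for n in (1, 2, 3, 4)}

    # The main thread is not blocked and can do unrelated work in the meantime.
    main_work = sum(range(1000))

    # Collect the precomputed values; result() waits only if one is not ready yet.
    results = {n: future.result() for n, future in futures.items()}

print(results)
```

In CPython, threads mainly help when the work waits on I/O or releases the global interpreter lock; for pure-Python number crunching a process pool may be the better fit.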

Now that we know the reason to use threading, let us see what it is.

What is threading?

Let's go back to our "human body" example. Each subsystem works independently of another, so even if there is a fault in one, another can continue to work (at least to start with). Just like our body, the Microsoft Windows operating system is very complex. It has several applications and services running independently of each other in the form of processes. A process is just an instance of the application running in the system, with dedicated access to address space, which ensures that data and memory of one process don't interfere with the other. This isolated process ecosystem makes the overall system robust and reliable for the simple reason that one faulting or crashing process cannot impact another. The same behavior is desired in any application that we develop as well. It is achieved by using threads, which are the basic building blocks for threading in the world of Windows and .NET.

- No longer available

- (Author)

- 2014(Publication Date)

- College Publishing House(Publisher)

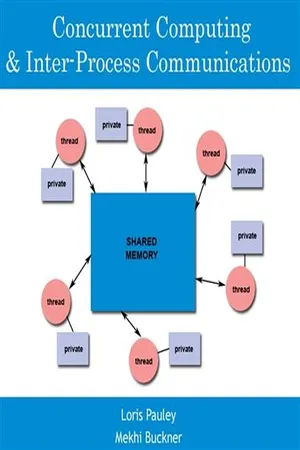

Chapter 9

Thread (Computer Science) and Communicating Sequential Processes

Thread (computer science)

A process with two threads of execution

In computer science, a thread of execution is the smallest unit of processing that can be scheduled by an operating system. It generally results from a fork of a computer program into two or more concurrently running tasks. The implementation of threads and processes differs from one operating system to another, but in most cases, a thread is contained inside a process. Multiple threads can exist within the same process and share resources such as memory, while different processes do not share these resources. In particular, the threads of a process share the latter's instructions (its code) and its context (the values that its variables reference at any given moment). To give an analogy, multiple threads in a process are like multiple cooks reading off the same cook book and following its instructions, not necessarily from the same page.

On a single processor, multithreading generally occurs by time-division multiplexing (as in multitasking): the processor switches between different threads. This context switching generally happens frequently enough that the user perceives the threads or tasks as running at the same time. On a multiprocessor or multi-core system, the threads or tasks will actually run at the same time, with each processor or core running a particular thread or task.

Many modern operating systems directly support both time-sliced and multiprocessor threading with a process scheduler. The kernel of an operating system allows programmers to manipulate threads via the system call interface. Some implementations are called a kernel thread, whereas a lightweight process (LWP) is a specific type of kernel thread that shares the same state and information.
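The cookbook analogy can be made concrete with a minimal Python sketch (Python is chosen here only for illustration; `cook`, `shared_log`, and the page numbers are hypothetical names). Every thread runs the same function, which is the shared "cookbook", with its own local arguments, while all of them append to one list that lives in the process's shared memory.

```python
import threading

shared_log = []                 # one object, visible to every thread in the process
log_lock = threading.Lock()     # protects the shared list during updates


def cook(name: str, page: int) -> None:
    # 'name' and 'page' are local to each thread; 'shared_log' is shared by all.
    with log_lock:
        shared_log.append((name, page))


threads = [
    threading.Thread(target=cook, args=(f"cook-{i}", i * 10))
    for i in range(3)
]
for t in threads:
    t.start()
for t in threads:
    t.join()                    # wait until every thread has finished

print(sorted(shared_log))
```

The order in which the entries arrive depends on scheduling, which is why the sketch sorts the list before printing it.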
- No longer available

- (Author)

- 2014(Publication Date)

- Learning Press(Publisher)

Chapter 5

Thread (Computer Science) and Communicating Sequential Processes

Thread (computer science)

A process with two threads of execution.

In computer science, a thread of execution is the smallest unit of processing that can be scheduled by an operating system. It generally results from a fork of a computer program into two or more concurrently running tasks. The implementation of threads and processes differs from one operating system to another, but in most cases, a thread is contained inside a process. Multiple threads can exist within the same process and share resources such as memory, while different processes do not share these resources. In particular, the threads of a process share the latter's instructions (its code) and its context (the values that its variables reference at any given moment). To give an analogy, multiple threads in a process are like multiple cooks reading off the same cook book and following its instructions, not necessarily from the same page.

On a single processor, multithreading generally occurs by time-division multiplexing (as in multitasking): the processor switches between different threads. This context switching generally happens frequently enough that the user perceives the threads or tasks as running at the same time. On a multiprocessor or multi-core system, the threads or tasks will actually run at the same time, with each processor or core running a particular thread or task.

Many modern operating systems directly support both time-sliced and multiprocessor threading with a process scheduler. The kernel of an operating system allows programmers to manipulate threads via the system call interface. Some implementations are called a kernel thread, whereas a lightweight process (LWP) is a specific type of kernel thread that shares the same state and information.
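That a thread is contained inside a process can be observed directly. The following is a small Python sketch using only the standard `os` and `threading` modules (the names `report` and `seen` are illustrative assumptions): every thread reports the same process ID but a different thread identifier.

```python
import os
import threading

N = 4
barrier = threading.Barrier(N)   # holds all threads alive at once so ids are not reused
seen = []
seen_lock = threading.Lock()


def report() -> None:
    barrier.wait()               # proceed only once all N threads have started
    with seen_lock:
        seen.append((os.getpid(), threading.get_ident()))


threads = [threading.Thread(target=report) for _ in range(N)]
for t in threads:
    t.start()
for t in threads:
    t.join()

process_ids = {pid for pid, _ in seen}
thread_ids = {tid for _, tid in seen}
print(len(process_ids), "process,", len(thread_ids), "threads")
```

Whether those threads run truly simultaneously or are time-sliced on one core is decided by the operating system's scheduler and the hardware, not by this code.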
- No longer available

- (Author)

- 2014(Publication Date)

- Academic Studio(Publisher)

Chapter 5

Thread (Computer Science) and Communicating Sequential Processes

Thread (computer science)

A process with two threads of execution.

In computer science, a thread of execution is the smallest unit of processing that can be scheduled by an operating system. It generally results from a fork of a computer program into two or more concurrently running tasks. The implementation of threads and processes differs from one operating system to another, but in most cases, a thread is contained inside a process. Multiple threads can exist within the same process and share resources such as memory, while different processes do not share these resources. In particular, the threads of a process share the latter's instructions (its code) and its context (the values that its variables reference at any given moment). To give an analogy, multiple threads in a process are like multiple cooks reading off the same cook book and following its instructions, not necessarily from the same page.

On a single processor, multithreading generally occurs by time-division multiplexing (as in multitasking): the processor switches between different threads. This context switching generally happens frequently enough that the user perceives the threads or tasks as running at the same time. On a multiprocessor or multi-core system, the threads or tasks will actually run at the same time, with each processor or core running a particular thread or task.

Many modern operating systems directly support both time-sliced and multiprocessor threading with a process scheduler. The kernel of an operating system allows programmers to manipulate threads via the system call interface. Some implementations are called a kernel thread, whereas a lightweight process (LWP) is a specific type of kernel thread that shares the same state and information.

- eBook - PDF

.NET Programming with Visual C++

Tutorial, Reference, and Immediate Solutions

- Max Fomitchev(Author)

- 2003(Publication Date)

- CRC Press(Publisher)

CHAPTER 5

Multithreaded Programming with .NET

In Depth

Multithreading is the ability of your code to perform multiple computations simultaneously. Unlike multitasking, multithreading requires that a single program perform various tasks in parallel. Multitasking, on the other hand, requires that an operating system execute multiple programs or tasks in parallel. Both multitasking and multithreading have become important features of software development. While multitasking is transparently supported by most operating systems, you get to decide whether to use multithreading or not when you write your own code.

- eBook - PDF

Software Development for Embedded Multi-core Systems

A Practical Guide Using Embedded Intel Architecture

- Max Domeika(Author)

- 2011(Publication Date)

- Newnes(Publisher)

Parallel Optimization Using Threads

… is either implemented by the programmer or provided by a library that abstracts some of this low level work must coordinate the threads. Nevertheless, designing for threads requires a disciplined process for best effect. In order to take advantage of threading, the software development process detailed in this chapter is recommended. The Threading Development Cycle (TDC) focuses on the specific needs and potential challenges introduced by threads. It is comprised of the following steps:

1. Analyze the application
2. Design and implement the threading
3. Debug the code
4. Performance tune the code

This chapter is specific to cases where the OS provides software threads and shared memory although the steps detailed in the TDC can be applied in the more general case. Before taking a deeper look at the TDC, some basic concepts of parallel programming are explained.

6.1 Parallelism Primer

The typical goal of threading is to improve performance by either reducing latency or improving throughput. Reducing latency is also referred to as reducing turnaround time and means shortening the time period from start to completion of a unit of work. Improving throughput is defined as increasing the number of work items processed per unit of time.

6.1.1 Thread

A thread is an OS entity that contains an instruction pointer, stack, and a set of register values. To help in understanding, it is good to compare a thread to a process. An OS process contains the same items as a thread such as an instruction pointer and a stack, but in addition has associated with it a memory region or heap. Logically, a thread fits inside a process in that multiple threads have different instruction pointers and stacks, but share a heap that is associated with a process by the OS. Threads are a feature of the OS and require the OS to share memory which enables sharing of the heap.

- eBook - ePub

- Daniel J. Duffy, Andrea Germani(Authors)

- 2013(Publication Date)

- Wiley(Publisher)

24 Introduction to Multi-threading in C#

24.1 INTRODUCTION AND OBJECTIVES

In this part of the book we introduce a number of design and software tools to help developers take advantage of the computing power of multi-core processors and multi-processor computers. These computers support parallel programming models. The main reason for writing parallel code is to improve the performance (called the speedup) of software programs. Another advantage is that multi-threaded code can also promote the responsiveness of applications in general.

We introduce a new programming model in this chapter. This is called the multi-threading model and it allows us to write software systems whose tasks can be carried out in parallel. This chapter is an introduction to multi-threaded programming techniques. We introduce the most important concepts that we need to understand in order to write multi-threaded applications in C#. A thread is a single sequential flow of control within a program. However, a thread itself is not a program. It cannot run on its own, but instead it runs within a program. A thread also has its own private data and it may be able to access shared data.

When would we create multi-threaded applications? The general answer is performance. Some common scenarios are:

- Parallel programming: much of the code in computational finance implements compute intensive algorithms whose performance (called the speedup) we wish to improve by using a divide-and-conquer strategy to assign parts of the algorithms to separate processors. A discussion of the divide-and-conquer and other parallel design patterns is given in Mattson, Sanders and Massingill 2005.

- Simultaneous processing of requests
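The divide-and-conquer strategy mentioned in the list above can be sketched as follows. The excerpt concerns C#; this is a Python illustration under stated assumptions, with `chunked` and `parallel_sum_of_squares` as hypothetical helper names. The data is split into parts, each part is handed to a worker thread, and the partial results are combined at the end.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(data, parts):
    """Split 'data' into at most 'parts' contiguous slices of similar size."""
    size = max(1, (len(data) + parts - 1) // parts)
    return [data[i:i + size] for i in range(0, len(data), size)]


def sum_of_squares(chunk):
    """Work done independently on one slice of the data."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, workers=4):
    chunks = chunked(data, workers)                        # divide
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))  # conquer each part
    return sum(partials)                                   # combine


total = parallel_sum_of_squares(list(range(1000)))
print(total)
```

The sketch shows the structure only. In CPython the global interpreter lock usually prevents pure-Python arithmetic from speeding up across threads, so a real speedup would typically need a process pool or code that releases the lock.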

- eBook - ePub

Learn C# Programming

A guide to building a solid foundation in C# language for writing efficient programs

- Marius Bancila, Raffaele Rialdi, Ankit Sharma(Authors)

- 2020(Publication Date)

- Packt Publishing(Publisher)

At the same time, blocking the code execution is also not acceptable and therefore a different strategy is required. This domain of problems is categorized under asynchronous programming and requires slightly different tools. In this chapter, we will learn the basics of multithreading and asynchronous programming and look specifically at the following:

- What is a thread?

- Creating threads in .NET

- Understanding synchronization primitives

- The task paradigm

By the end of this chapter, you will be familiar with multithreading techniques, using primitives to synchronize code execution, tasks, continuations, and cancellation tokens. You will also understand what the potentially dangerous operations are and the basic patterns to use to avoid problems when sharing resources among multiple threads. We will now begin familiarizing ourselves with the basic concepts needed to operate with multithreading and asynchronous programming.

What is a thread?

Every OS provides abstractions to allow multiple programs to share the same hardware resources, such as CPU, memory, and input and output devices. The process is one of those abstractions, providing a reserved virtual address space that its running code cannot escape from. This basic sandbox avoids the process code interfering with other processes, establishing the basis for a balanced ecosystem. The process has nothing to do with code execution, but primarily with memory. The abstraction that takes care of code execution is the thread. Every process has at least one thread, but any process code may request the creation of more threads that will all share the same virtual address space, delimited by the owning process

- eBook - ePub

- Nick Samoylov(Author)

- 2022(Publication Date)

- Packt Publishing(Publisher)

As you can see, there are many ways to get results from a thread. The method you choose depends on the particular needs of your application.

Parallel versus concurrent processing

When we hear about working threads executing at the same time, we automatically assume that they literally do what they are programmed to do in parallel. Only after we look under the hood of such a system do we realize that such parallel processing is possible only when the threads are each executed by a different CPU; otherwise, they time-share the same processing power. We perceive them working at the same time only because the time slots they use are very short—a fraction of the time units we use in our everyday life. When threads share the same resource, in computer science, we say they do it concurrently.

Concurrent modification of the same resource

Two or more threads modifying the same value while other threads read it is the most general description of one of the problems of concurrent access. Subtler problems include thread interference and memory consistency errors, both of which produce unexpected results in seemingly benign fragments of code. In this section, we are going to demonstrate such cases and ways to avoid them.

At first glance, the solution seems quite straightforward: allow only one thread at a time to modify/access the resource, and that’s it. But if access takes a long time, it creates a bottleneck that might eliminate the advantage of having many threads working in parallel. Or, if one thread blocks access to one resource while waiting for access to another resource and the second thread blocks access to a second resource while waiting for access to the first one, it creates a problem called a deadlock. These are two very simple examples of possible challenges a programmer may encounter while using multiple threads.

First, we’ll reproduce a problem caused by the concurrent modification of the same value. Let’s create a Calculator
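The excerpt breaks off before its own example, so the following is not the book's code. It is a minimal Python sketch of the same class of problem, with `Counter`, `increment_unsafe`, `increment_safe`, and `run` as hypothetical names. An unsynchronized read-modify-write on a shared value can lose updates, and letting only one thread at a time modify the value, here with a lock, avoids that.

```python
import threading


class Counter:
    """Illustrative shared resource (hypothetical; not taken from the excerpt)."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment_unsafe(self) -> None:
        # Read, add, write back: another thread may interleave between these
        # steps, so some increments can be lost.
        self.value += 1

    def increment_safe(self) -> None:
        # Only one thread at a time may perform the read-modify-write.
        with self._lock:
            self.value += 1


def run(method_name: str, n_threads: int = 4, n_iter: int = 10_000) -> int:
    counter = Counter()
    method = getattr(counter, method_name)

    def worker() -> None:
        for _ in range(n_iter):
            method()

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


safe_total = run("increment_safe")        # always n_threads * n_iter
unsafe_total = run("increment_unsafe")    # may fall short, depending on scheduling
print(safe_total, unsafe_total)
```

Whether the unsafe version actually loses updates on a given run depends on the interpreter version and on thread scheduling, which is part of what makes such bugs hard to reproduce. The lock also serializes access, which is the bottleneck the excerpt warns about.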

Index pages curate the most relevant extracts from our library of academic textbooks. They’ve been created using an in-house natural language model (NLM), each adding context and meaning to key research topics.